Apr 1, 2010 | News

by Alberto Carrara | translated by Adrian Lawrence

What would happen to a man if a spear pierced through his face entering through his jaw and exiting through his skull?

In the worst case, we would probably say, this man would die a victim of the accident. Or perhaps we would expect him to fall into a coma, possibly reversible, with multiple injuries. After a long convalescence, with luck and all the help of modern medicine, he might recover from the injury, though with various physical disorders. That would be the happy ending.

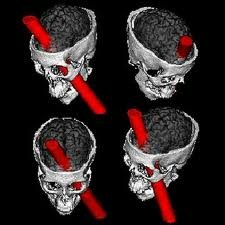

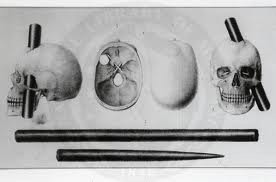

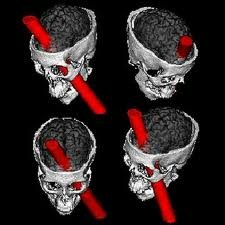

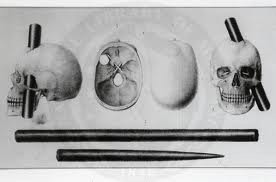

Yet the history of medicine has rarely seen a case as extraordinary as that of Phineas Gage (1823-1860). September 13, 1848 marks an occasion of great significance in the history of neuroscience. Phineas Gage, a twenty-five-year-old foreman working on the construction of a railway in Vermont, in northern New England, suffered a serious accident. As a result of an explosion, an iron rod three feet long and three centimeters wide penetrated his face, piercing his jaw at the zygomatic arch, then passing through the cranial cavity and finally breaking through the frontal bone.

His friends were greatly surprised when Gage, who did not die on the spot, recovered from his initial and understandable dizziness to the point that he could describe the accident in minute detail to the doctor who first attended him. Gage lost a large amount of prefrontal cortex, but survived the injury and regained his physical health.

The story, however, does not end here. Phineas Gage was never again the man he had been before the accident. Why? Although he had suffered no sensory or motor damage, and no changes in language or memory were detected, Phineas Gage’s personality changed noticeably. John Harlow (1819-1907), the doctor who treated Gage in the Massachusetts General Hospital in Boston, recorded his observations in a historic article about this patient.

Harlow described his patient as follows: “His physical health is good, and I am inclined to say that it has recovered … The balance or equilibrium, so to speak, between his intellectual faculties and animal predispositions seems to have been destroyed. He is impulsive, irreverent …, shows little deference to his colleagues, is intolerant to their limitations and the advice they offer him when it doesn’t match up with his desires. He is sometimes very stubborn, but most of all, whimsical and wavering. He makes many plans to better himself for the future, which he ends up leaving in nothing other than the organizational stage … In this regard, his mind has completely changed to the point that his friends and acquaintances say: he isn’t Gage anymore” (Principles of Neuroscience, Elsevier, Madrid 2003, p. 519).

This story marks the first concise scientific description of the main symptoms associated with the destruction of the prefrontal cortex in a human. After the accident and two months in the hospital, Phineas Gage lived for more than ten years and died of epilepsy at the age of thirty-seven. But Gage was already someone else. But who?

Today, a few die-hard reductionist scientists speak of the discovery of the area or part of our brain responsible for our moral judgments. Gage’s personality changed because his brain changed. Indeed, after Gage’s death, his skull was donated to the Warren Anatomical Museum of Harvard University. Numerous studies have identified very accurately the area of his brain damaged in the accident. This area was mainly the left part of the ventromedial prefrontal region.

Although this area of the cerebral cortex is related to personality and numerous scientific studies increasingly show it to be the best candidate for the neural correlates associated with moral behavior, this does not mean or imply that this area is the cause of morality in man.

Juan Lerma, a prestigious Spanish neurobiologist and director of the Institute of Neurosciences of Alicante, says that neuroscience is teaching us to see that we humans “are what our brain determines us to be, and this will have an influence in education or in the management of violence” (interview in Diario Médico, Friday January 4, 2008). This way of thinking, quite widespread in our society, considers our brain the biological structure that on the one hand defines the ultimate essence of being human, and on the other presents itself as the factor that shapes our future. Many today tend to believe that we are our brain.

Neuroscience research seems to bring with it a “plus”, as was well pointed out by José Manuel Giménez-Amaya and José I. Murillo from Navarra University (Neuroscience and freedom: An Interdisciplinary Approach, Scripta Theologica, vol. XLI, fasc. 1, January-April 2009, p. 26), that “adds the feeling of unmasking the most human part of man, and putting it under our control.” This kind of research is so attractive because it proposes to tackle the most ambitious goal of experimental science, namely: to explain rationally and empirically the great questions about our unique human condition.

There is no doubt, however, that current neuroscientific data are not yet able to trace the overall functioning of our brain in its cognitive, affective, volitional, emotional, and memory processes. Scientifically and rationally speaking, therefore, one cannot “rely solely on these results to draw unitary conclusions about the actions of man” (Neuroscience and freedom: An Interdisciplinary Approach, Scripta Theologica, vol. XLI, fasc. 1, January-April 2009, p. 34).

The American philosopher Alva Noë, in his book Out of Our Heads: Why You Are Not Your Brain, puts forward a balanced criticism of the certainties of neuroscience and the contagious optimism that surrounds them. The idea that runs throughout the book is that man is not his brain; the human being is not locked into his materiality, even biologically speaking, but “the phenomenon of consciousness, as well as life, is a dynamic process involving the world.” Man lives, de facto, outside his head.

Our moral capacities, our abilities to form ethical judgments and carry them out, need intact physical and biological structures. That is, the brain, and in particular the left ventromedial prefrontal cortex, is the necessary condition for humans to maintain moral behavior suited to their nature. “As a window does not cause light, but is a necessary condition so that the light caused by the sunshine can enter and illuminate” a room, as the anthropologist Lucas Lucas says in his book Explícame la persona, so the causal relationship between morality and the brain is not that the brain is the cause of man’s morality, but that an intact brain structure is the necessary condition for man to express his morality (Explícame la persona, ART, Rome 2010, p. 64).

Phineas Gage, after the injury, continued being the same person he was before, but the manifestation of his personality was altered by the pathological condition generated as a result of the accident that impaired his left cerebral cortex.

In conclusion, together with the study of the chemical and biological processes of the human brain, so necessary in the understanding of its functioning, it is imperative that contemporary neuroscience broadens its horizons by integrating the plain observable data with a reflective understanding of the human person based on an integral philosophical-anthropological vision of man.

Scientists themselves perceive in the study of the human mind the need to distinguish between mind and brain, between the human person who acts morally and neurobiological factors that sustain his intellect, will, and affectivity, which form part of his actions. Science itself sees the great mystery of a human dimension that transcends mere brain physiology and that, after all, is the very foundation of our dignity as beings with a peculiar “position” in the cosmos.

Apr 1, 2010 | News

by Alberto Carrara (translated by Adrian Lawrence)

What is a cloistered Carmelite nun doing kneeling with her eyes closed, connected to dozens of cables plugged into an EEG machine?

The question seems legitimate. The answer, however, is not so simple.

Indeed, many nuns and Buddhist monks have been recruited since the ’90s for neuroscientific studies on religious experience. One must remember that the years 1990-2000 were designated by the U.S. president “the decade of the brain.” Along with the justified enthusiasm for unraveling, in a short period of time, all the mysteries of our brain, the decade 2000-2010 has witnessed an impressive growth in neurobiological experiments. This development of global import, the result of interdisciplinarity and cooperation between different scientific viewpoints, has not stayed in the laboratory, but has literally invaded our daily lives.

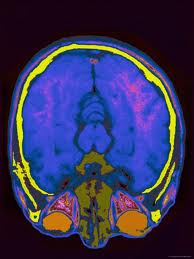

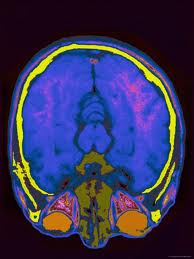

Today the technological capability to visualize the brain areas activated under specific circumstances has produced a veritable sea of studies with differing results.

The development of neuroimaging techniques, among which the famous fMRI (functional magnetic resonance imaging) stands out, could not remain confined to the crucial clinical task of diagnosing brain diseases. Studies multiplied according to the imagination and ingenuity of each scientist. So, from wanting to understand the neurophysiological basis of human activities such as memory, language, vision, and personality, researchers began to investigate, as José Manuel Giménez-Amaya stated, “the most distinctively human thing of man”: religious experience (“God in the brain? religious experience from neuroscience,” Scripta Theologica magazine, March 2010).

In every social milieu the prefix neuro is already being used to promote, sell, and convince, and in the wake of this real neuromania new words have been proposed and have begun to circulate side by side, such as neuroeconomics, neurophilosophy, neuropolitics, and also neurotheology.

What is this all about? And above all, what does neuroscience reveal about God and our natural tendency towards the transcendent?

One should first consider briefly some of the experiments in this area to judge the conclusions and interpretations made by contemporary scientists.

Dr. Mario Beauregard of the Department of Psychology at the University of Montreal in Canada published in 2006, in volume 405 of Neuroscience Letters, an article on the neural correlates of religious experience. The experiments described involved perfectly healthy cloistered Carmelite nuns, who were asked to recall their experiences of union with God in prayer. While they did so, the scientists recorded the sisters’ brain activity using fMRI and electroencephalography. Two years later, in 2008, the same scientist published in the same journal a contribution outlining electroencephalographic data recorded during mystical experience.

These studies, like several others that cannot be described here in detail, concluded that during religious experiences many brain regions become activated, especially in the cortex. Complex, cognitively structured neural networks are involved, with significant activation, compared to a control (nuns who were not praying), of the famous AAA (Attention Association Area), the brain area associated with concentration. The scientists also showed reduced activation of the OAA (Orientation Association Area), the area of association and spatial orientation. Already in 2004, Olaf Blanke, from the Department of Neurology of Geneva (Switzerland), had published in the journal Brain an interesting work on the involvement of this area with “out-of-body” experiences.

As scientific data, these results show precisely this: during a spiritual experience many areas of our brain are modulated (activated or deactivated). What is measured is not the mystical experience itself, but intense intellectual-emotional activity. The wealth of the religious experience, natural in every human being, is manifested in a bodily dimension at the level of the complex neural networks that come into play.

From a scientific datum one often passes to an interpretation that in the end can even become real manipulation. Thus Dr. Andrew Newberg of the University of Pennsylvania in Philadelphia (USA), performing the same experiments with Buddhist monks and Franciscans, and reaching the same scientific results, wrote a book entitled God in the brain: Why God Won’t Go Away, which reduces religious experience to something produced by our brain. Newberg and other reductionists interpret the data of the experience of the transcendent as if the brain itself were the direct and ultimate cause of such experiences. Hence one could conclude, as does the “father” of contemporary neuroscience, Michael S. Gazzaniga: if our brains produce the religious experience, God is in the brain, and in the end, the brain becomes God. Simple, almost a perfect syllogism. This view was successfully popularized by the Spaniard E. Punset in his book The Soul is in the Brain.

The truth is, unfortunately for these types of scientists (who are the minority), that neuroscientific data do not show directly the human experience of God, but rather try to identify its neurophysiological bases associated with the phenomenology of such religious experience.

False interpretations of functional magnetic resonance imaging results are not easily unmasked by the lay public. Interpreting data therefore requires great care and balance. Remember that human experience, precisely for its being “human,” is characterized by remarkable richness and complexity.

An important statement of Thomas Aquinas comes to mind, relevant today more than ever in the context of the reduction of human beings to mere materiality: “hic homo singularis intelligit” (S. Th. I, 76, a.1, c.), it is the man who thinks. It is not his brain that has an experience of God, but it is he himself, in his totality, who is in contact with a reality not measurable or empirical. A truth cannot be imprisoned by an MRI machine, even if it is “functional.” According to the Viennese philosopher Günther Pöltner this approach of Thomas to the practical life represents a contribution to the contemporary debate imbued with psychological and neurological reductionism.

In conclusion, if we understand by the term theology what it has always meant, intellectus fidei (scientia fidei or fides quaerens intellectum), that is, the science or knowledge of the ultimate foundation of everything, namely God, by the light of faith, then one can surely see the inappropriateness of the concept of neurotheology.

What is now called neurotheology is a reflection on the neuroscientific results obtained from religious or mystical experience seen from its intellectual-emotional side. Instead of neurotheology it would be more correct to use another term, such as the neurophenomenology of religious experience.

As José Manuel Giménez-Amaya said so well in his article “God in the brain? Religious experience seen from neuroscience”, published in March 2010 in the journal Scripta Theologica, University of Navarra, theology has the role of “a correcting function required by thought.” Since “science, in general, is knowledge with foundations, i.e., premises known to us,” and that “the very idea of science refers to the existence of an ultimate foundation of all that is,” then “this is where theology is at stake as a discipline that studies the ultimate foundation of all reality.”

We need to open the full potential of our rationality and not reduce it to the size of our brain organ.

Apr 1, 2010 | News

By Alberto Carrara (translated by Adrian Lawrence)

We find ourselves in Spain in the middle of a bullfight. The audience is applauding the undaunted bullfighter. In comes the famous beast which strikes fear in men’s hearts. It’s an enormous animal that would put any common mortal in a cold sweat. The show has begun, but something strange happens. At the sight of the red cape, the “brute” hesitates, then turns around and goes back indifferently to the door from whence it came. A brief silence ensues. The initial laughter of the crowd turns into cries of protest. What’s going on with the bull? Did it lose its head?

And its head, or better yet its brain, is exactly the problem.

How is such a sudden change of behavior possible? The Spanish scientist José Delgado explains it with his experiments that earned attention from the New York Times on 17 May 1965. Delgado implanted an electrode in the brain of a bull. The stimulus generated and controlled by the investigator was able to stop the fearsome charge of the animal excited by the red color of the cape. In a second experiment, besides just stopping, the bull actually turned around and trotted off as if nothing had happened.

These results of Delgado, together with experiments using LSD (lysergic acid diethylamide) on elephants carried out in the ’60s by the American psychiatrist Louis West, marked the first serious attempt to evaluate, from an ethical viewpoint, the modern advances and discoveries in the field of the neurosciences.

Here was born, although still only implicitly, modern neuroethics.

An initial definition of neuroethics could already be glimpsed in the very purpose of the numerous studies the Hastings Center promoted in the ’70s, namely, examinations of the ethical problems relating to surgical and pharmaceutical interventions performed on the human brain. Nevertheless, even if the term appears (as the neuroethicist Judy Illes reports) in the scientific literature from as far back as 1989, its first definition is considered to date from May 2002, when the first world congress of experts, “Neuroethics: Mapping the Field”, was held in San Francisco. The merit goes to William Safire, a political columnist for the New York Times, for the contemporary definition: “neuroethics is that part of bioethics that is interested in establishing what is licit, that is, what can be done in respect to therapy and the improving of brain functions, as well as evaluating the diverse forms of interference and unsettling manipulations of the human brain.”

The ever faster application of neuroscientific discoveries to human beings, fruit of the abundant investigations that try to decipher the mysteries of the human brain and mind, has elicited conflicting and often hostile feelings in the general public. Precisely because of the “human” character of these advances, a corresponding ethical reflection arises, and hence neuroethics is born.

In every social milieu the prefix “neuro” is being used to promote, sell, convince… One might be quietly eating a chocolate bar and on opening the candy wrapper find a picture that tries to illustrate the profound “scientific” sense of human love as nothing more than a collection of brain neurotransmitters: dopamine, oxytocin, and so on.

MRI images are almost part of our basic culture: words like PET (positron emission tomography) or functional magnetic resonance imaging (fMRI) are already part of our memory. We have heard them on the radio and television; we read them on the Internet.

The first question that may arise is why they are so famous. Pictures of the human brain, x-rays, and MRI results with little red and yellow dots are found today in any field of debate: from the medical to the psychological, the economic to the political, the philosophical to the theological, creating a real “neuromania.” We can already google (a new term used by Google fans) any term that starts with the prefix “neuro” and be almost certain to find that it exists, along with related descriptions. Neuroeconomics, neuropolitics, neurophilosophy, neurotheology… are just some examples from this veritable gold mine. Everything seems to be explained by the greater or lesser activity of our brain.

A second question concerns the content, that is, the information that these images contain. What is it that they reveal? The results of neuroimaging are useful for studying brain function and indicate the greater or lesser activity of zones of the brain in comparison to a control standard.

The objective of these very advanced techniques is to understand better how our brain works. This is true both in the medical world, for example, in the case of diagnosing brain diseases such as tumors, and also in the context of studies that endeavor to understand the neurophysiological bases for human activities such as memory, language, vision, and personality. One always needs to keep in mind the interpretation of these images, though. In fact, the interpretation of the results (in this case as in other cases in the context of the modern scientific method) is governed by the assumptions and theories developed by each laboratory—one might even say, each scientist. So we are faced with a potential diversity of interpretations for the very same empirical result.

The fascination of these scientific advances can in no way take the place of the necessary prudence which one must employ to discern and process the results.

The public remains captivated by the multitude of perspectives and real concrete applications of these technologies. For example, the so-called “brain trademarks or brain fingerprinting”, which measure brain waves and distinguish between true and false responses, have replaced the obsolete psychosomatic lie-detectors used in the courts. There are also brain electrodes that are being tested on patients with Parkinson’s or chronic depression, and so forth. Some scientists are working on the construction of microchips that take the place of brain memory areas damaged by Alzheimer’s, stroke, epilepsy, and other sicknesses.

These invaluable benefits to the quality of human life are confronted with numerous ethical questions that arise from the use of these discoveries for less noble purposes. We might consider the possibility of “mind control” that could be applied at a social level; or the real, already effective strategies that use neuroscience in order to understand and modulate purchasing decisions, the so-called neuromarketing.

In the middle of a fragmented and superficial culture such as the one we live in, we need a good dose of prudence, namely the right reason one needs when taking actions and formulating conclusions, especially those that touch the existential and fundamental aspects of our lives. That is to say, it is not a matter of indifference whether we believe that it is our brain and not us that acts, reasons, and makes judgments. These beliefs (because that is what they are, seeing that they are asserted without any foundation in modern science) have very deep repercussions on our actions, and even on the way we relate to others and ourselves.

At the center of neuroscience, as in all other intellectual and human activities, there isn’t a brain, but a person. It is the human person, and not his brain, that thinks, plans, dreams, acts, loves, and cries. It is he who can inquire about his own brain, discover its workings, and bring to light, little by little, its mysteries.

John Paul II summarizes it very well in his discourse to the members of the Pontifical Academy of Sciences on 10 November 2003: “Neuroscience and neurophysiology, through the study of chemical and biological processes in the brain, contribute greatly to an understanding of its workings. But the study of the human mind involves more than the observable data proper to the neurological sciences. Knowledge of the human person is not derived from the level of observation and scientific analysis alone but also from the interconnection between empirical study and reflective understanding. Scientists themselves perceive in the study of the human mind the mystery of a spiritual dimension which transcends cerebral physiology and appears to direct all our activities as free and autonomous beings, capable of responsibility and love, and marked with dignity.”

If the various realities of the human person are not distinguished while its complexity is acknowledged, everything becomes homogeneous, horizontal, simple, controllable, and subject to manipulation. The “soul” would become analogous to the “I,” and the “I” to the brain, and hence the technological mindset will prevail and reduce man to a materiality that does not even correspond to that of a brute animal. Hence the widespread mentality today that continues to consider “the problems and emotions of the interior life from a purely psychological point of view, even to the point of neurological reductionism.” (Caritas in Veritate, n. 76).

The human person is at the center of the multidisciplinary discussions about neuroethics, man in his unity and totality, with all his dimensions, with all his constituents: material, mental, and spiritual.

For this reason James Giordano of Oxford in 2005 proposed the term neurobioethics, hoping to stimulate common research from the contributions of neuroscience and the philosophical-anthropological vision which is centered on the human person.

In an environment of interdisciplinarity, neurobioethics tries to collect, select, evaluate, and interpret the data available to neuroscientists, highlighting the most salient ethical issues through a multidisciplinary approach and making evident the central role that the human person has, because of his or her individuality, intrinsic value, and dignity, in any area of neuroscientific research (www.neurobioetica.it; www.neurobioethics.org).

Neurobioethics looks for points in common with other human disciplines to expand rationality itself and help to give a comprehensive response to the urgent ethical issues that are accumulating day by day.

His friends were greatly surprised when Gage, who didn’t die on the spot, after initial and understandable dizziness, came to himself to such a point that he could narrate in minute detail the accident to the doctor who attended him in first aid. Gage lost a large amount of prefrontal cortex, but survived the injury and regained his physical health.

His friends were greatly surprised when Gage, who didn’t die on the spot, after initial and understandable dizziness, came to himself to such a point that he could narrate in minute detail the accident to the doctor who attended him in first aid. Gage lost a large amount of prefrontal cortex, but survived the injury and regained his physical health. This story marks the first concise scientific description of the main symptoms associated with the destruction of the prefrontal cortex in a human. After the accident and two months in the hospital, Phineas Gage lived for more than ten years and died of epilepsy at thirty-seven years of life. But Gage was already someone else. But who?

This story marks the first concise scientific description of the main symptoms associated with the destruction of the prefrontal cortex in a human. After the accident and two months in the hospital, Phineas Gage lived for more than ten years and died of epilepsy at thirty-seven years of life. But Gage was already someone else. But who? Neuroscience research seems to bring with it a “plus”, as was well pointed out by José Manuel Giménez-Amaya and José I. Murillo from Navarra University (Neuroscience and freedom: An Interdisciplinary Approach, Scripta Theologica, vol. XLI, fasc. 1, January-April 2009, p. 26), that “adds the feeling of unmasking the most human part of man, and putting it under our control.” This kind of research is so attractive because it proposes to tackle the most ambitious goal of experimental science, namely: to explain rationally and empirically the great questions about our unique human condition.

Neuroscience research seems to bring with it a “plus”, as was well pointed out by José Manuel Giménez-Amaya and José I. Murillo from Navarra University (Neuroscience and freedom: An Interdisciplinary Approach, Scripta Theologica, vol. XLI, fasc. 1, January-April 2009, p. 26), that “adds the feeling of unmasking the most human part of man, and putting it under our control.” This kind of research is so attractive because it proposes to tackle the most ambitious goal of experimental science, namely: to explain rationally and empirically the great questions about our unique human condition. Phineas Gage, after the injury, continued being the same person he was before, but the manifestation of his personality was altered by the pathological condition generated as a result of the accident that impaired his left cerebral cortex.

After the injury, Phineas Gage remained the same person he had been before, but the manifestation of his personality was altered by the pathological condition that resulted from the accident, which had impaired his left cerebral cortex.

Indeed, since the ’90s many nuns and Buddhist monks have been recruited for neuroscientific studies on religious experience. One must remember that the years 1990-2000 were designated by the U.S. president “the decade of the brain.” Alongside the justified enthusiasm for unraveling, in a short period of time, all the mysteries of our brain, the decade 2000-2010 has witnessed an impressive growth in neurobiological experiments. This development, global in scope and the result of interdisciplinary cooperation between different scientific viewpoints, hasn’t stayed in the laboratory, but has literally invaded our daily lives.

In conclusion, if we understand by the term theology what it has always meant, intellectus fidei (scientia fidei or fides quaerens intellectum), that is, the science or knowledge of the ultimate foundation of everything, namely God, by the light of faith, then one can surely see the inappropriateness of the concept of neurotheology.

We find ourselves in Spain in the middle of a bullfight. The audience is applauding the undaunted bullfighter. In comes the famous beast that strikes fear into men’s hearts. It’s an enormous animal that would put any common mortal in a cold sweat. The show has begun, but something strange happens. At the sight of the red cape, the “brute” hesitates, then turns around and goes back indifferently to the door from which it came. A brief silence ensues. The initial laughter of the crowd turns into cries of protest. What’s going on with the bull? Did it lose its head?

In science, as in all other intellectual and human activities, there isn’t a brain, but a person. It is the human person, and not his brain, that thinks, plans, dreams, acts, loves, and cries. It is he who can inquire about his own brain, discover its workings, and bring to light, little by little, its mysteries.